(San Francisco) Earlier this year, Mark Austin, vice president of data science at AT

That’s a big change, Austin thought. But since ChatGPT is public, he wondered if it was safe for businesses to use it.

In January, AT

Forms that previously took hours to complete take two minutes with Ask AT

Like AT

Faced with this new demand, technology companies are rushing to launch generative AI products for enterprises. Since April 1, Amazon, Box, and Cisco have announced generative AI-powered products that will produce code, analyze documents, and summarize meetings. Salesforce has also just launched generative AI products for sales, marketing and its Slack messaging service. Oracle has announced an AI product for human resources.

These companies are also increasing their investment in AI development. In May, Oracle and Salesforce Ventures, the venture capital arm of Salesforce, invested in Cohere, a Toronto-based startup specializing in generative AI for business. Oracle also resells Cohere’s technology.

Generative AI “is a breakthrough in enterprise software,” says Aaron Levie, CEO of Box.

Many of these tech companies are following the lead of Microsoft, which has invested $13 billion in OpenAI, the creator of ChatGPT. In January, Microsoft made the Azure OpenAI service available to its customers, giving them access to OpenAI’s technology to build their own versions of ChatGPT. As of May, the service had 4,500 customers, said Microsoft vice president John Montgomery.

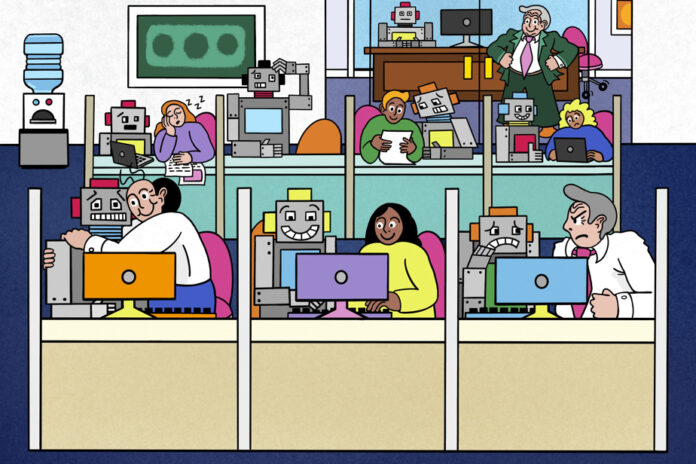

Broadly, tech companies are currently releasing four types of generative AI products for business: features and services that generate code for software engineers; create new content, such as sales emails and product descriptions, for marketing teams; search corporate data to answer employee questions; and summarize meeting notes and long documents.

These tools will be “used by people to accomplish what they’re already doing,” says Bern Elliot, a vice president and analyst at the IT research and consulting firm Gartner.

But generative AI at work comes with risks. AI bots can produce inaccuracies, provide inappropriate answers, and make data leaks possible. And AI remains largely unregulated.

Faced with these challenges, technology companies have taken action. To prevent data leaks and increase security, some offer generative AI products that do not retain customer data.

When Salesforce launched AI Cloud, a service comprising nine generative AI products for enterprises, in June, the company included a firewall meant to hide sensitive company information and prevent leaks. Salesforce promises that what users type into AI Cloud will not be used to train the underlying AI.

Similarly, Oracle says that customer data will be kept in a secure environment while its AI model is trained, and promises that the company itself will not be able to see this information.

Salesforce offers AI Cloud starting at US$360,000 per year, with the cost increasing with usage. Microsoft charges for the Azure OpenAI service based on the version of OpenAI technology chosen by the customer and the volume of usage.

For now, generative AI is mostly used in low-risk work scenarios — not in highly regulated industries — with a human in the loop, points out Beena Ammanath, director of the Deloitte AI Institute research center. A recent Gartner survey of 43 companies indicates that more than half of respondents did not have an internal generative AI policy.

Panasonic Connect, a subsidiary of Japan’s Panasonic, started using Microsoft’s Azure OpenAI service in February to create its own chatbot. Today, its employees query the chatbot 5,000 times a day on tasks as varied as writing emails and writing code.

Panasonic Connect thought its engineers would be the main users of the AI chatbot. In fact, its legal, accounting and quality assurance teams have also turned to it to summarize legal documents, improve product quality and perform other tasks, says Judah Reynolds, marketing and communications manager at Panasonic Connect.

“Everyone started using it in ways we didn’t anticipate,” he says. “People really benefit from it.”