When Jennifer Watkins received a message from YouTube ordering the closure of her channel, she wasn’t worried. After all, she didn’t use YouTube.

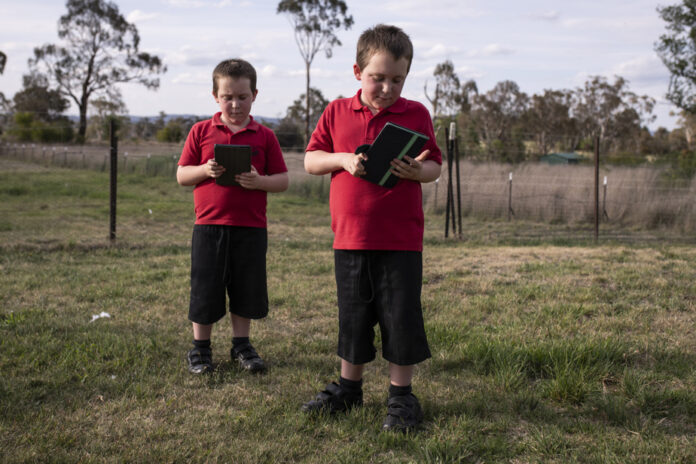

Her 7-year-old twins, however, used a tablet connected to her Google account to watch children’s content and to upload videos of their dancing antics to YouTube. Few of these videos had been viewed more than five times. The one that got Ms. Watkins in trouble, made by one of her sons, was different.

YouTube, a Google subsidiary, has artificial intelligence systems that scan the hundreds of hours of videos uploaded every minute. Sometimes this examination goes wrong and identifies innocent people as child molesters.

The New York Times has documented other cases where parents’ digital lives were upended by naked photos and videos of their children, spotted by Google’s artificial intelligence and deemed illicit by human reviewers. Some of those parents were investigated by the police.

In Ms. Watkins’ case, the boy’s “butt video,” posted to YouTube in September, was quickly flagged as possible child sexual exploitation, a violation of Google’s terms of service with serious consequences.

Ms. Watkins, a medical worker living in Australia, suddenly lost access to YouTube, but more importantly to all her Google accounts, including her photos, documents and email. She could no longer receive her work schedule, view her bank statements or “order an ultra-frappé” with the McDonald’s app through her Google account.

A Google login page informed her that her account was going to be deleted, but that she could appeal the decision. She clicked the “Request Call” button and wrote that her sons are 7, that it’s the age where “butts make you laugh,” and that they were the ones who posted the video.

Blocking her accounts “hurts her financially,” she added.

Child welfare organizations and elected officials around the world have been pushing tech companies to counter the spread of child pornography on their platforms by blocking this type of content. Many online services now scan photos and videos recorded and shared by users, looking for objectionable images that have already been reported to authorities.

Google also wanted to be able to detect new content. The company developed an algorithm, trained on known images, that is meant to identify previously unseen exploitative content. Google made it available to Meta, TikTok and other companies.

The video posted by Ms. Watkins’ son, identified by this algorithm, was later seen by a Google employee, who thought it was problematic. Google reported it to the National Center for Missing and Exploited Children (NCMEC), an organization charged by the US government with collecting reported content. (NCMEC can add the reported images to its database and decide whether they should be reported to the police.)

According to NCMEC statistics, Google’s platforms are among the most used to distribute “potential child pornography.” Google made more than 2 million reports last year, far more than most digital companies, but fewer than Meta.

(Experts say it’s difficult to gauge the severity of the phenomenon from numbers alone. Facebook experts scrutinized a small sample of reported images of children: they found that 75 percent of users who posted these images “had no malicious intent.” Some of them were teenage lovers who shared intimate images. Other users had shared a meme showing a child’s genitals bitten by an animal “because they thought it was funny.”)

In fall 2022, Susan Jasper, Google’s director of trust and safety, announced that the company planned to update its appeals process to “improve the user experience” for people who “believe that [the company] has made bad decisions.” Google now provides an explanation when it suspends an account, instead of, as before, a notice citing only a “serious violation” of the company’s rules. Ms. Watkins was thus informed that her account had been blocked because of a video that fell under the heading of “child exploitation.”

Ms. Watkins’ repeated appeals were unsuccessful. She had a paid Google account that allowed her to communicate with customer service, which ruled that the video, even though it was the innocent act of a child, still violated the company’s policies (the digital correspondence was reviewed by the Times).

This draconian punishment for a child’s escapade is unfair, says Ms. Watkins, who wonders why Google didn’t give her a warning before cutting off access to all her accounts and more than a decade of digital memories.

After more than a month of unsuccessful attempts with Google, Ms. Watkins wrote to the Times.

A day after the newspaper’s call, her Google account was reinstated.

“We do not want our platforms to be used to endanger or exploit children,” Google said, recalling the expectations of the public and authorities regarding “the strongest measures to detect and counter” sexual abuse inflicted on children. “In this case, we understand that the policy-violating content was not posted with malicious intent.” Google provided no explanation for why a rejected appeal cannot be reconsidered other than through the New York Times.

In such situations, Google is caught between a rock and a hard place, notes Dave Willner, a researcher at the Cyber Policy Center at Stanford University who has worked on security at several large technology companies. Even if a photo or video is posted online without ill intent, it can be taken and shared for malicious purposes.

He said Ms. Watkins’ problems with Google after her accounts were blocked show that people should “spread out their digital lives” and not rely on one company for all their services.

Ms. Watkins draws another lesson from her mishap: children should not use their parents’ account. Parents should create a separate account for their children’s internet activities, which is what Google recommends.

But not for her twins, says Ms. Watkins, or at least not right away. After what happened, they are banned from the internet.