In November 2022, Facebook’s parent company, Meta, released a chatbot called Galactica. After a torrent of complaints that the chatbot was making up historical events and producing other nonsense, Meta took it offline.

Fifteen days later, San Francisco-based OpenAI launched ChatGPT, which became a worldwide sensation.

The two chatbots were built on essentially the same technology. But unlike Meta, OpenAI had refined its chatbot using a technique that was just beginning to change how artificial intelligence is built.

In the months leading up to the release of ChatGPT, OpenAI hired hundreds of beta testers to provide specific suggestions that could improve its skills. Like an army of tutors guiding an elementary school student, these people showed the chatbot how to approach questions, evaluated its answers and corrected its mistakes. By analyzing these suggestions, ChatGPT became a better chatbot.

These chatbots run on new systems that acquire skills by analyzing data. Much of this data is found, refined and in some cases created by an army of low-paid workers in the United States and other countries.

For years, companies like Google and OpenAI have used these workers to prepare the data used to train AI. Workers in India and Africa have labeled everything from photos of stop signs (to train driverless cars) to signs of colon cancer (for use in diagnostic technologies).

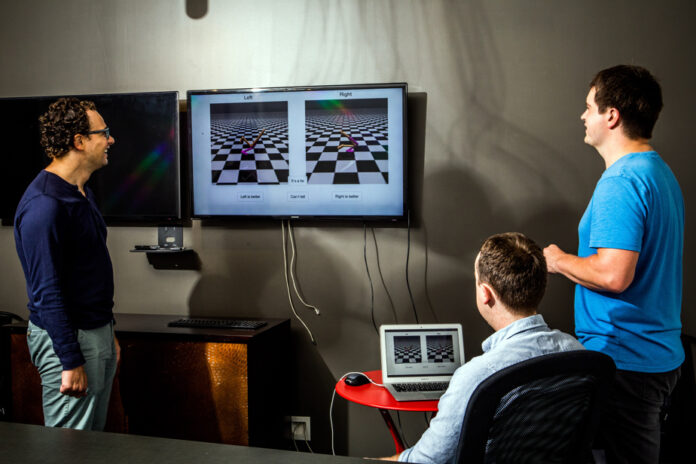

To build their chatbots, AI companies rely on similar workers, though often better-educated ones. Reinforcement learning from human feedback is far more complex than the simple data tagging that fueled AI development in the past. In this kind of work, the workers act as tutors, giving the machine detailed, specific feedback with the aim of improving its responses.

Last year, OpenAI and Anthropic recruited freelance workers in the United States on the Upwork site. Hugging Face, another leading lab, uses U.S. workers recruited by data collection contractors Scale AI and Surge.

According to Hugging Face researcher Nazneen Rajani, these workers are evenly split between men and women, range in age from 19 to 62, and have education levels ranging from technical degrees to doctorates.

In the United States, these workers are paid between $15 and $30 an hour. Those in other countries earn much less. When Hugging Face asked Amazon to provide workers, it was told that workers based in the United States would be five times as expensive as those abroad.

This work requires hours of meticulous writing, editing and rating. It can take 20 minutes to write a single question and its answer. Human feedback is what allows today’s chatbots to carry on a sustained conversation instead of providing a single response. It also helps AI companies reduce the misinformation, bias and other toxic content produced by their systems.

According to a recent study by researchers at Stanford and the University of California, Berkeley, the accuracy of OpenAI’s technology has declined in recent months at solving math problems, generating computer code and reasoning. This may be an unintended side effect of human feedback.

Researchers don’t yet understand why, but improving the system in one area may make it less accurate in another.

“Fine-tuning the system can introduce additional biases – side effects – that cause it to drift in unexpected directions,” said James Zou, a computer science professor at Stanford.

In 2016, OpenAI researchers built an AI system that taught itself to play an old boat racing video game, Coast Runners. But in its effort to collect the little green markers along the course (the way to score points), the system steered the boat erratically, crashing it into the dock, where it caught fire. It struggled to cross the finish line, which was just as important as scoring points.

OpenAI researchers found a solution to this problem in algorithms that can both learn tasks by analyzing data and take guidance from humans. With just a few mouse clicks, a person could show the AI system that it needed to reach the finish line, not just accumulate points.
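To make the idea concrete, here is a minimal sketch of how a handful of human clicks could be turned into a scoring rule. It is not OpenAI’s actual method: the four behaviors, their two numeric features, the clicks and the simple logistic (Bradley-Terry style) fit are all assumptions made up for illustration.

```python
import math

# Hypothetical boat behaviors, each described by two made-up features:
# (points collected, 1.0 if the boat crossed the finish line else 0.0).
clips = {
    "circles_for_points": (9.0, 0.0),
    "finishes_few_points": (3.0, 1.0),
    "finishes_some_points": (5.0, 1.0),
    "crashes_early": (1.0, 0.0),
}

# Each pair is one hypothetical human click: (preferred clip, rejected clip).
human_choices = [
    ("finishes_few_points", "circles_for_points"),
    ("finishes_some_points", "circles_for_points"),
    ("finishes_some_points", "finishes_few_points"),
    ("finishes_few_points", "crashes_early"),
    ("circles_for_points", "crashes_early"),
]


def reward(weights, features):
    """Score a behavior as a weighted sum of its features."""
    return sum(w * x for w, x in zip(weights, features))


def fit_reward(choices, steps=5000, lr=0.01):
    """Fit weights so that preferred clips tend to score above rejected ones."""
    weights = [0.0, 0.0]
    for _ in range(steps):
        grad = [0.0, 0.0]
        for winner, loser in choices:
            diff = reward(weights, clips[winner]) - reward(weights, clips[loser])
            # Modeled probability that the human prefers `winner`.
            p_winner = 1.0 / (1.0 + math.exp(-diff))
            for i in range(2):
                grad[i] += (1.0 - p_winner) * (clips[winner][i] - clips[loser][i])
        # Gradient ascent on the log-likelihood of the observed clicks.
        weights = [w + lr * g for w, g in zip(weights, grad)]
    return weights


weights = fit_reward(human_choices)
ranked = sorted(clips, key=lambda name: reward(weights, clips[name]), reverse=True)
print(ranked)
```

Under these assumptions, the learned scoring rule places both finishing behaviors above the one that circles for points, even though circling collects the most points. A system trained against such a rule, rather than the raw game score, would be pushed toward the finish line.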

Around the same time, OpenAI, Google and other companies began building “large language models,” systems that learn from vast amounts of digital text on the internet, including books, Wikipedia articles and discussion forums.

The result: systems like Meta’s Galactica that can write articles, solve math problems, generate computer code and annotate images. As Galactica showed, these systems can also generate false, biased or toxic information. Asked “Who runs Silicon Valley?” Galactica replied, “Steve Jobs.”

Labs therefore began to refine large language models using the same technique that OpenAI had applied to the old boat racing video game. The result: advanced chatbots like ChatGPT.

Ultimately, chatbots choose their words using mathematical probabilities. So human feedback can’t solve all their problems, and it may change their performance in unexpected ways.
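What choosing words by probability can look like is sketched below, as a rough illustration only. The candidate words and their scores are invented, and real chatbots work over far larger vocabularies with scores produced by a trained model; the `softmax` and `pick_next_word` helpers are hypothetical names used for this example.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - top) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def pick_next_word(scores, rng):
    """Draw one word at random, weighted by its probability."""
    probs = softmax(scores)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


# Invented scores for continuing the phrase "The capital of France is".
scores = {"Paris": 4.0, "Lyon": 1.5, "London": 1.0, "banana": -2.0}

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [pick_next_word(scores, rng) for _ in range(1000)]
for word in scores:
    print(word, samples.count(word))
```

In this toy setup the most likely word is drawn most of the time, but less likely words still have a nonzero chance of being picked. Feedback can shift the scores; it does not remove the element of chance.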

Yann LeCun, chief AI scientist at Meta, believes that a new technique must be developed before chatbots can be completely reliable. Human feedback “works surprisingly well, in that it helps prevent bad things from happening,” he said. “But it’s not perfect.”